When I think of large datacenters, I think of rows and rows of servers in a remote warehouse, all humming away 24×7. Amazon, Google and Apple all rely on keeping their datacenters running 24×7 and can’t afford to have them go offline.

If you’ve ever been in a datacenter, you were probably shocked at just how loud it is. Servers are loud, and for a reason: they generate lots of heat, and it takes a lot of cooling to keep them running. I often find it amusing when TV shows have scenes set in server rooms that are whisper quiet. Believe me, there’s nothing more eerie than a quiet datacenter, as it’s a sure sign that something has gone drastically wrong!

The processors in modern servers have large heatsinks attached to them to draw heat away from their surface. Without these heatsinks they’d quickly overheat and either shut down or break. Fans then cool the heatsinks and push the hot air out of the server. Typically, cool air enters the front of a server and hot air exits out the back.

Great, so the server stays cool, but what about the room? If you have racks and racks, all filled with servers, each with multiple processors, you end up with a lot of heat! The simplest solution is air cooling. You may have a heat pump at home to keep you cool in the summer months; datacenters use huge air conditioners to remove the heat from the room and cool it down.

I used to be responsible for a (comparatively) small server room of around 80 servers. In this room we had 4 large air conditioners running 24×7, enough to keep things reasonably cool even if one of them were to fail. Unfortunately, we once had all 4 units go offline at the same time due to a power problem, and I experienced first-hand how quickly the room heated up without cooling. Within mere minutes, the room was almost unbearable as we battled to shut down each of the 80 servers before they started failing! That wasn’t a fun day!

In the large datacenters that companies such as Google run, with thousands of servers, air cooling alone isn’t enough. There you need liquid cooling, which is more efficient at whisking away the heat. With liquid cooling, the server racks are connected by pipes that work much like the radiator in your car: coolant is pumped through the pipes, carrying heat away and keeping the temperature under control far more efficiently.

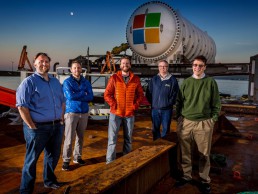

This is where Microsoft’s experiment takes the next step. Microsoft believes it will be more efficient and cheaper to place a datacenter under water, where there’s an abundance of cold water to help cool things down. It’s a radical idea with pros and cons, but it may just be a sign of things to come.

The datacenter they’re experimenting with is part of a project called “Natick” and was recently launched (quite literally) off the coast of Orkney, in the north of Scotland. I’ve lived in the north of Scotland and can attest to the water being cold there!

Orkney was also chosen because of its abundance of renewable energy, readily available to power the experiment. Microsoft partnered with the Orkney-based European Marine Energy Centre (EMEC) to develop the experiment.

Project Natick involves submerging a sealed datacenter that is connected to the shore only by power and data cables.

There’s no way in or out of the datacenter, so if any servers suffer hardware problems, nobody can get in to fix them. However, because Microsoft removes the oxygen and humidity from inside the datacenter, it expects the risk of failure to be much lower, since there’s little to cause corrosion.

It’s an interesting experiment, and I look forward to seeing the results in a few years. Microsoft reckons it’ll be far more cost-effective and easier to add more underwater pods as demand for processing increases. That’s a good thing, as our demand for ever faster internet services keeps growing. Microsoft estimates an underwater datacenter could be deployed in around 90 days, whereas the same thing on land could take years.

[…] Microsoft’s Underwater Datacenter (June 11, 2018): What better place than under the sea to cool down a bunch of servers! […]